This article is a chapter from my newest book, Fitness Science Explained, which is live now in our store.

If you want a crash course in reading, understanding, and applying scientific research to optimize your health, fitness, and lifestyle, this book is for you.

Also, to celebrate this joyous occasion, I’m giving away $1,500 in Legion gift cards! Click here to learn how to win.

***

“If I have seen further than others, it is by standing upon the shoulders of giants.”

—ISAAC NEWTON

You now know how science fundamentally works and the role that evidence plays in the scientific method.

You also know that not all evidence is created equal—there’s high- and low-quality evidence, and the ultimate goal of any line of research is the accumulation of high-quality evidence for a given hypothesis.

What determines the “quality” of evidence, though, and why do some kinds of evidence carry more weight than others?

Answering this question can seem all but impossible when you’re poking around online. Every time you think you know what a study means, someone else comes along and points out why another, better piece of evidence trumps that one.

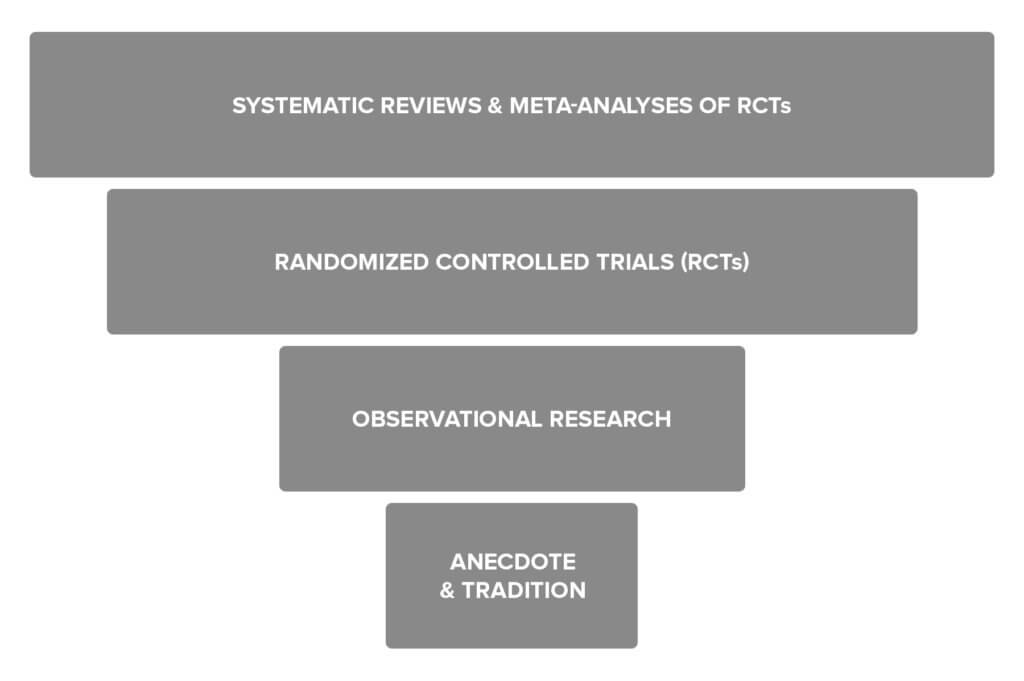

The good news is that scientists have a proven system for ranking evidence called the hierarchy of evidence. A hierarchy is “a system or organization in which people or groups are ranked one above the other according to status or authority.”

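Here’s how the hierarchy of evidence works, with the highest-quality evidence at the top and the lowest-quality at the bottom:

- Systematic reviews and meta-analyses of RCTs
- Randomized controlled trials (RCTs)
- Observational research
- Anecdote and tradition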

Let’s take a closer look at each, starting at the bottom, with the weakest form of evidence.

Anecdote and Tradition

Anecdote and tradition represent the lowest quality of evidence.

Anecdote refers to things like “it worked for me” or “my friend got great gains from using this supplement,” and tradition refers to things like “everyone does it this way” or “bodybuilders have always trained like this.”

These are still valid forms of evidence.

The scientific method is relatively new, especially as applied to the fields of health and fitness, so anecdote and tradition are what most people relied on for years.

And they tend to get the basics right.

Ask many experienced bodybuilders and athletes what you should do to get in shape, and most will probably tell you “lift weights, do some cardio, and eat lots of fruits, vegetables, and lean meats.”

The problem, though, is that you’ll get plenty of conflicting opinions, too.

If you only rely on anecdote and tradition to make decisions about what’s true and what isn’t, then you can waste years second-guessing yourself, jumping from one diet, supplement, and exercise plan to the next.

Sound familiar?

Anecdote and tradition are considered low-quality evidence because they involve too many unknown and uncontrolled variables.

For example, just because bodybuilders have traditionally trained a certain way doesn’t mean it’s the best way to train.

Many aspects of bodybuilding training are based on ideas that have been passed down from one generation to the next. People tend to engage in herd behavior, following what everyone else is doing even if it’s not necessarily optimal, so there may not be any truth to these things that “everyone knows” about bodybuilding.

Another example is that just because a particular supplement has worked for someone doesn’t mean the supplement generally works.

Perhaps the psychological expectation that the supplement would work resulted in the person training harder and paying more attention to diet, which would explain the better results.

Perhaps the person was simultaneously doing other things that were responsible for the improved gains.

Or, perhaps the individual was already improving his body composition and the supplement had nothing to do with it.

As you can see, anecdote and tradition occupy the bottom of the hierarchy of evidence for good reason.

Observational Research

Next on the hierarchy is observational research, which you briefly learned about earlier in this book.

With this type of research, scientists observe people in their free-living environments, collect some data on them, and then look for relationships between different variables. In the world of disease, this type of research is known as epidemiological research.

An example of this type of research would be where scientists take a very large group of people (think thousands), assess their dietary habits and body weight, and then review those numbers 10 years down the road. They may look at how many people had heart attacks during that 10-year period, and then use statistical tools to see whether heart attacks could be related to diet.

This type of research is higher quality than anecdote or tradition because the data has been systematically gathered from large numbers of people and formally analyzed. However, as mentioned earlier, it can only establish correlations (relationships), not cause and effect.

For example, you might be able to show that higher fat intake is related to heart attack risk regardless of body weight. That doesn’t mean higher fat intake causes heart attacks, though, since other variables (like exercise habits or other aspects of diet) can influence that relationship.

Scientists can try to use statistics to reduce the likelihood of other variables skewing the results, but they can never fully isolate one variable as the causative factor in an observational study.
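To see how a correlation can show up without any cause and effect behind it, here’s a minimal simulation sketch. Everything in it is invented for illustration: a hypothetical "exercise" variable lowers both fat intake and heart risk, while fat intake itself has no effect on risk at all.

```python
import random
import statistics

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

rng = random.Random(0)
people = []
for _ in range(20000):
    exercise = rng.gauss(0, 1)                       # hypothetical confounder
    fat_intake = -0.7 * exercise + rng.gauss(0, 1)   # assumed: exercisers eat less fat
    heart_risk = -0.7 * exercise + rng.gauss(0, 1)   # assumed: fat intake plays no role
    people.append((exercise, fat_intake, heart_risk))

# Correlation across everyone: fat intake looks "related" to heart risk
raw_correlation = pearson([p[1] for p in people], [p[2] for p in people])

# Compare only people with nearly identical exercise levels
similar = [p for p in people if abs(p[0]) < 0.1]
adjusted_correlation = pearson([p[1] for p in similar], [p[2] for p in similar])

print(f"everyone:         {raw_correlation:.2f}")
print(f"similar exercise: {adjusted_correlation:.2f}")
```

In this toy world the relationship mostly disappears once exercise is held roughly constant. That only works because the simulation knows what the confounder is. In real observational data, there may be others nobody measured.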

In other words, observational studies can point you in the right direction, but that’s about it. They’re typically used to justify more expensive, detailed studies on a particular topic.

And that leads us to the randomized controlled trial.

Randomized Controlled Trials (RCTs)

Next, we have one of the highest-quality forms of evidence: the randomized controlled trial, or RCT.

In an RCT, scientists take a group of people and randomly divide them into two or more groups (hence the term “randomized”). The scientists try to keep everything the same between the groups, except for one variable that they want to study, which is known as the independent variable.

Scientists change the independent variable in one or more groups, and then see how the people respond. One group may not receive any treatment, or may get a fake treatment (also known as a sham treatment or a placebo), and this group is often called the control group, hence the term randomized controlled trial.

For example, let’s say scientists want to see how creatine supplementation affects muscle strength. Here’s how the study could go:

The researchers recruit people for the study, and then randomly assign them to a high-dose creatine group, a low-dose creatine group, and a placebo or control group (a group that thinks they’re getting creatine but aren’t).

Before supplementation begins, everyone’s strength is measured on a simple exercise like the leg extension, and then all of the subjects do the same weight training program for 12 weeks while also taking the supplement or placebo every day.

After 12 weeks, leg extension strength is measured again and the data is analyzed to see if some groups gained more strength than others.

If the changes are significantly greater (more on significance soon, as this has a specific definition) in the creatine groups, the scientists can state that the creatine caused greater increases in strength compared to not taking creatine. They can also compare strength gains between the high-dose and low-dose creatine groups to see if a higher dose is more effective.
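The creatine example can be sketched as a small simulation. This is a hypothetical illustration, not data from any real trial: the group names, the number of subjects, and the assumed strength gains are all invented.

```python
import random
import statistics

GROUPS = ["placebo", "low_dose", "high_dose"]

def randomize(subject_ids, groups, rng):
    """Shuffle the subjects, then deal them out to the groups in turn."""
    shuffled = list(subject_ids)
    rng.shuffle(shuffled)
    assignment = {group: [] for group in groups}
    for i, subject in enumerate(shuffled):
        assignment[groups[i % len(groups)]].append(subject)
    return assignment

rng = random.Random(42)
assignment = randomize(range(90), GROUPS, rng)

# Invented average 12-week gains in leg extension strength (kg)
ASSUMED_MEAN_GAIN = {"placebo": 10.0, "low_dose": 12.0, "high_dose": 13.0}

# Each subject's gain = their group's assumed average + individual variation
gains = {
    group: [rng.gauss(ASSUMED_MEAN_GAIN[group], 3.0) for _ in subjects]
    for group, subjects in assignment.items()
}

for group in GROUPS:
    print(group, len(gains[group]), round(statistics.fmean(gains[group]), 1))
```

Because chance alone decides who lands in which group, differences between people (age, training history, genetics) tend to even out across groups. Whether the gap between group averages counts as "significant" takes a formal statistical test, which this sketch leaves out.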

RCTs rank above observational research because their highly controlled nature allows them to establish cause and effect relationships.

In the example above, assuming the research is conducted honestly and competently, it’s scientifically valid to say the study showed that creatine causes greater strength gains.

RCTs do have limitations, though.

One major factor is the number of subjects involved because, as you know, the smaller the sample size, the higher the risk that the findings are due to chance.

Another is how similar the research environment is to real life. In some cases, the research environment can be so highly controlled that the results might not be applicable to the general population.

For example, many studies that look at appetite have subjects consume food in a lab environment. However, the number of foods and the amounts of food given in the lab can impact how much people eat. Thus, how people eat in the lab may not reflect how they eat in the real world.
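The sample-size limitation mentioned above can be illustrated with a quick simulation. In this sketch, both groups are drawn from the same made-up population, so any difference between them is pure chance. The group sizes and population values are assumptions chosen for illustration.

```python
import random
import statistics

def chance_differences(n_per_group, trials, seed=1):
    """Differences between two group averages when there is NO true effect."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(trials):
        group_a = [rng.gauss(10.0, 3.0) for _ in range(n_per_group)]
        group_b = [rng.gauss(10.0, 3.0) for _ in range(n_per_group)]
        diffs.append(statistics.fmean(group_a) - statistics.fmean(group_b))
    return diffs

small = chance_differences(n_per_group=8, trials=1000)
large = chance_differences(n_per_group=200, trials=1000)

# How far apart the group averages typically land by luck alone
spread_small = statistics.stdev(small)
spread_large = statistics.stdev(large)

print(f"typical chance difference with 8 per group:   {spread_small:.2f}")
print(f"typical chance difference with 200 per group: {spread_large:.2f}")
```

With only a handful of subjects per group, luck alone can produce differences that look like a real effect. Larger groups pull those chance differences much closer to zero.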

By and large, though, a well-conducted randomized controlled trial is the most accurate tool we have for deciding what’s probably true and what isn’t.

So, what could be better than an RCT?

A bunch of RCTs.

Systematic Reviews and Meta-Analyses of RCTs

When a group of studies exists on a particular topic, a systematic review or meta-analysis can tell you where the weight of the evidence lies on that topic. This is why they occupy the top of the evidence pyramid.

Let’s unpack exactly what that means.

A systematic review is a type of study that involves gathering all of the research on a particular topic, and then evaluating it based on a set of predefined rules and criteria. In other words, the research is reviewed in a systematic fashion designed to minimize researcher biases.

This is different from a narrative review, where a researcher or researchers will informally gather evidence around a topic and share their opinions about what it means.

A meta-analysis is similar to a systematic review, but takes things one step further and does a formal statistical analysis of RCTs.

You can think of it as a “study of studies” because scientists gather a bunch of RCTs that fit predefined criteria, and then run complicated statistical analyses on them to give you an idea where the overall weight of the evidence lies.

Systematic reviews and meta-analyses represent the highest level of evidence because they gather the best RCTs and research on a topic and show you what that research generally agrees on.
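One common way to combine studies is inverse-variance weighting, where more precise studies count for more. The sketch below is a simplified fixed-effect version with invented numbers. Real meta-analyses often use more elaborate models, and none of these figures come from the studies discussed in this chapter.

```python
import math

# (effect estimate, standard error) for each hypothetical study
studies = [
    (0.50, 0.30),
    (0.20, 0.10),
    (0.35, 0.20),
    (0.10, 0.15),
]

def pool_fixed_effect(studies):
    """Inverse-variance weighted average of the effects, plus its standard error."""
    weights = [1.0 / se ** 2 for _, se in studies]
    weighted_sum = sum(w * effect for w, (effect, _) in zip(weights, studies))
    pooled = weighted_sum / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se

pooled, pooled_se = pool_fixed_effect(studies)
low, high = pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se
print(f"pooled effect: {pooled:.2f} (95% CI {low:.2f} to {high:.2f})")
```

The pooled estimate has a smaller standard error than any single study in the list, which is the statistical reason a "study of studies" can be more trustworthy than its individual parts.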

For example, one systematic review and meta-analysis of weightlifting research looked at 21 studies comparing light loads (≤60% of one-rep max, or 1RM) to heavy loads (>60% of 1RM) and how those training plans impacted strength and muscle gain.

The analysis showed that gains in strength were greater with heavy loads than with light loads, but gains in muscle size were similar as long as sets were taken to muscular failure. This type of analysis is superior to any individual study because it produces findings that are more likely to be true for most people.

While systematic reviews and meta-analyses represent the highest level of evidence, they still have flaws. Poor study selection or statistical analysis can lead to poor results because these types of studies are only as good as the data and methods used.

For instance, if you take a bunch of studies with mediocre results, combine them into a meta-analysis, and then run them through the right statistical tools, you can make the results look more impressive at first glance than they really are.

A good example of this is a 2012 meta-analysis on the effects of acupuncture on chronic pain that reported that acupuncture reduced pain 5% more than sham acupuncture (placebo).

When you look at the details, though, you discover that none of the highest-quality studies in the review showed significant effects, and the researchers included several studies with extreme results that were likely the result of bias or flawed research methods, as well as several smaller studies with a higher likelihood of false positive results.

Therefore, the study’s conclusion that “acupuncture is effective for the treatment of chronic pain” was misleading and oversold.
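A simple way to check for this kind of problem is to pool the studies twice, once with everything and once with only the most trustworthy studies, and see how much the answer moves. The sketch below uses invented numbers and a simplified inverse-variance average. It is not a reanalysis of the acupuncture data.

```python
def pooled_effect(studies):
    """Inverse-variance weighted average of (effect, standard_error) pairs."""
    weights = [1.0 / se ** 2 for _, se in studies]
    weighted_sum = sum(w * effect for w, (effect, _) in zip(weights, studies))
    return weighted_sum / sum(weights)

# Invented numbers: three large, careful studies with effects near zero...
high_quality = [(0.02, 0.05), (0.00, 0.06), (0.03, 0.05)]
# ...plus smaller or flawed studies reporting extreme effects
questionable = [(0.90, 0.15), (1.20, 0.20), (0.60, 0.25)]

all_studies = pooled_effect(high_quality + questionable)
best_only = pooled_effect(high_quality)

print(f"pooled effect, all studies:       {all_studies:.3f}")
print(f"pooled effect, high-quality only: {best_only:.3f}")
```

If the overall result shrinks sharply once the questionable studies are set aside, that’s a sign the headline finding rests on the weakest evidence in the pile.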

***

You’ve probably asked yourself at one time or another, “With all of the conflicting studies out there, how are you supposed to know what’s true and what’s not?”

Now you know the answer—by examining how robust evidence is according to the hierarchy, you can understand which claims are more likely to be true than others.

You can know, for instance, that anecdotes online or even published case studies shouldn’t be weighted more than the results of RCTs, that the results of an individual RCT aren’t the final word on a matter, and that research reviews and meta-analyses can be scientific treasure troves.

***

Scientific References

- Vickers, A. J., Cronin, A. M., Maschino, A. C., Lewith, G., MacPherson, H., Foster, N. E., Sherman, K. J., Witt, C. M., & Linde, K. (2012). Acupuncture for chronic pain: Individual patient data meta-analysis. Archives of Internal Medicine, 172(19), 1444–1453. https://doi.org/10.1001/archinternmed.2012.3654

- Schoenfeld, B. J., Grgic, J., Ogborn, D., & Krieger, J. W. (2017). Strength and hypertrophy adaptations between low- vs. high-load resistance training: A systematic review and meta-analysis. Journal of Strength and Conditioning Research, 31(12), 3508–3523. https://doi.org/10.1519/JSC.0000000000002200

- Gosnell, B. A., Mitchell, J. E., Lancaster, K. L., Burgard, M. A., Wonderlich, S. A., & Crosby, R. D. (2001). Food presentation and energy intake in a feeding laboratory study of subjects with binge eating disorder. International Journal of Eating Disorders, 30(4), 441–446. https://doi.org/10.1002/eat.1105